The Unexplored Internet¶

Back to future¶

Before describing the project in detail, we would like to highlight some important historical facts and trace the cause-and-effect relationships behind the creation and development of the Internet. They bear directly on our project, because the main goal of Ace Stream is to «fix» (improve) the entire Internet. Under the Ace Stream project we provide the technologies, tools and strategies needed to make the Internet what it was originally planned to be and what it should finally become!

"It seems unthinkable that the Web is over 25 years old and most of us can barely imagine life without it. It was created by the efforts of millions. We all helped to build it, and the future of the Internet still depends on us. We all need to use our creativity, skills and experience to make it better: stronger, safer, fairer and more open. Let's choose the network we want and thus the world we want", - Sir Tim Berners-Lee (the creator of the World Wide Web)

History and indisputable facts let us see reality as it is, understand what is happening now, and see clearly the options for the foreseeable future, so that we can set the right goals and choose the best ways to achieve them.

«The full potential of the Internet is just beginning to emerge. A radically open, egalitarian and decentralized platform is changing the world, and we are still just scratching the surface of what it can do. Anyone with an interest in the Internet, and that is everyone, everywhere, has a role to play in ensuring that the Web achieves everything it can», - Sir Tim Berners-Lee

Then…

On 23 August 1991, British scientist Tim Berners-Lee changed the world with his "World Wide Web" system by opening the world's first website (info.cern.ch/) to the public. The world was given the Web as a gift (without patents, copyrights or trademarks).

The genius of an invention like the Internet lay not only in its social value, but also in the architecture and technological features built into the foundation of its operation and development.

The World Wide Web initially had no single center. Decentralization of peers was always considered one of the key features of the WWW. Like the Internet's ancestors (the ARPANET and NSFNet networks), it offered operational resilience and an absence of geographical borders and network barriers. The HTTP protocol connects all computing devices on the planet that have an Internet connection. To do so, it relies on many trusted servers (the Domain Name System) that translate web addresses into the network addresses of servers.

The logic built into the operation of the Internet implied the following: people who wanted to publish something online had to run their own web servers on their own computers, while the companies serving the Network had to perform purely technical functions, providing a reliable connection between all peers of the Network (building backbones and connecting users' computers by cable).

So, the Internet was originally created and operated as a peer-to-peer (P2P) network in which each user was a full network node (both client and server). A user published information directly on his own computer, and others received it directly from the publisher. Anyone could duplicate (copy/post) the received information on their own computer and share it with others, thereby helping it spread and giving other users of the Web the ability to choose the most convenient and fastest (closest) source of data.

For example, in such a Web, if your neighbor wanted to post a video, he would not need to send it to the site/service of some intermediary whose servers are on another continent. And to view it, you would not need to download hundreds of megabytes or gigabytes from another continent, as happens in centralized systems such as YouTube, Facebook, etc. Instead, you would receive the video directly from that neighbor, or from another nearby neighbor who had already watched and saved it (someone in the same building, street, city or country as you).

Originally it was a platform that allowed everyone to exchange information directly, without any centralized services/servers run by intermediaries. In such a peer-to-peer (P2P) network, information in great demand was held on a larger number of computers and information in less demand on fewer. At the same time, it accumulated as much as possible at the geographical points where it was consumed most, so all data travelled along the shortest routes without overloading international backbones and other remote networks with traffic. The bandwidth of such a peer-to-peer network was determined primarily by the total bandwidth of the computers (hosts) connected to the Network, and its performance grew almost in proportion to the number of network users and the amount of information produced and consumed.

This is exactly the kind of unlimited Internet that its Creators gave the world!

«Internet is a radically open, egalitarian and decentralized platform»

Sir Tim Berners-Lee

Well, as the saying goes: «Other people’s things are more pleasing to us, and ours to other people»!

Evolution of the Internet Web or something gone wrong¶

It would be reasonable to assume that the next evolutionary step for such a decentralized Internet would have been the creation of new, effective decentralized technologies to support the improvement and development of the Web in full accordance with the (technological and social) foundations laid down in it. And it is likely that if another prominent scientist, Ted Nelson from Chicago, had been able to release his Xanadu project on time (he founded it in 1960 with the goal of creating a computer network with a simple user interface), we would now have the perfect "Internet".

17 rules of Xanadu («Internet» project from 1960)

- Each Xanadu server is uniquely and securely identified.

- Each Xanadu server can be operated independently or in a network.

- Each user is uniquely and securely identified.

- Each user can search, download, create and store documents.

- Each document can consist of any number of parts, each of which can be data of any type.

- Each document may contain links of any type, including virtual copies («transclusions») of any other document in the system accessible to its owner.

- Links are visible and traceable from any endpoint.

- Permission to refer to a document is expressly granted by the act of publication.

- Each document may contain an author reward (royalty) mechanism at any degree of granularity to ensure payment for any portion accessed, including virtual copies («transclusions») of all or part of the document.

- Each document is uniquely and securely identified.

- Each document can be protected for access control.

- Each document can be found quickly, stored and downloaded without the user's knowledge of the physical location of the document.

- Each document is automatically moved to physical storage, corresponding to the frequency of access to it from any given point.

- Each document is automatically saved with redundancy, allowing you to maintain access to it even in the event of a disaster.

- Each Xanadu service provider may charge its users at any rate they choose to store, search and post documents.

- Each transaction is secure and can be audited only by the parties to it.

- The Xanadu client-server communication protocol is an openly published standard. Third party software development and integration is encouraged.

Had all these rules been present in the Internet as originally created, they would have practically nullified every attempt to centralize the Network, as there simply would have been no practical benefit in doing so.

This is how the Internet was supposed to be!

Why did it fail?

Nelson was a genius. Suffice it to say that he developed most of the principles used on the Internet today. But, like many brilliant people, he apparently had serious problems implementing his ideas.

He promised to complete work on Xanadu first by 1979, then by 1987. And in 1987 he named 1988 as the "deadline" for the completion of work.

In 1988 the promising project was bought by Autodesk.

Its owner John Walker believed that «in 1989 Xanadu will become a product and in 1995 it will start to change the world».

In the end, it took more than half a century to implement Nelson’s ideas. Only in April 2014 did the aging but still enthusiastic pioneer present the final version of his creation, OpenXanadu, at Chapman University, California.

Had the creator of hypertext unveiled his work in the late seventies, or even in the mid-nineties, we would now be using a completely different Internet. But Nelson demonstrated his «magical mansion» too late. Humanity has been living for 30 years with a Web built on the TCP/IP protocols, HTTP, the HTML language and URL identifiers.

Sir Tim Berners-Lee

The good news is that openness and flexibility were built into the structure of the Web from the start. The protocols and programming languages under the hood, including URLs, TCP/IP, HTTP, HTML, JavaScript and many more, have almost all been designed for evolution, so we can modernize them as new needs, new devices, and new business models emerge, eliminating current limitations.

Meanwhile, while Autodesk's leaders were persistently and unsuccessfully trying to get a finished product out of Xanadu's creator, Tim Berners-Lee, an employee of the European laboratory CERN, proposed his own global hypertext project, called the World Wide Web, which was a simplified version of Xanadu. It lacked the capabilities that would have made the Network simpler and easier to use from the start and would have made many centralized technologies unnecessary.

The Web (WWW) in its initial form required users to have a good understanding of the technology, and building the decentralized technologies needed to work efficiently in such a Web was a very difficult task that would have taken many years. So the development of the Internet took a simpler path, both technically and ideologically, and it led in exactly the opposite direction. Actively promoting the original principles of decentralization, which were the foundation of Tim Berners-Lee's creation, did not fit the conservative policies of the commercial companies that decided to use the network for their own interests and for which centralization is the only understandable model of work. Centralization was also the simplest technological solution for making everything work correctly. Of course, everybody knows that speed and effective scaling, as well as privacy and transparency, are key components of the Web, but at the end of the day money does make the world go round.

As the Web grew, companies emerged that took over the technical support of web publishing and communications. Among the first popular centralized services were MySpace and Yahoo. With Flickr, photographers could easily post their photos on the Internet and share them with others. YouTube did the same for video, and tools such as WordPress and wikis allowed everyone to run their own blogs and/or take part in the collaborative creation of public knowledge bases. Social networks, in particular, allowed everyone to be online in order to chat and exchange information with other users in a convenient way. All of this was carried out through the websites and services of intermediaries, who provided the necessary infrastructure and built it on their central servers. Commercial companies began to take control of all users' publications, tying all communications and any exchange of information between users to their own services/servers. This active commercial integration, together with the development of centralized technologies and convenient services, began to change the fundamental principles and logic of the original Internet drastically, subjecting the logistics of the Web to a serious and very dangerous change.

In contrast to the logic of the original (decentralized) Internet, in which users managed all their own information and publications and could transfer data to each other along the shortest route, the new logic of the Network rests on diametrically opposed logistics. Under it, if your neighbor wants to show you his video, he first needs to send it to the intermediary's server, which could be located on another continent, and to watch the video you need to download it from that server rather than directly from the neighbor or from any other nearby neighbor who has already watched it. Moreover, to maximize profit, the intermediary's service strictly prohibits you from saving (duplicating) the video on your computer, so if you want to watch it again or share it with others, you need to download it again from the intermediary's server. As a result, everyone repeatedly pulls the same video from a server located on another continent.

In the end, the world got "the Internet in reverse", which many call Web 2.0, and whose essence and logic are as follows: the more people sit on a single web resource and the central servers of an intermediary, carrying out all their communications through it (receiving information, posting publications, exchanging data, chatting, etc.), the better! As a result:

Cory Doctorow, director of the European branch of the human rights organization Electronic Frontier Foundation

Over the last 20 years we have managed to practically destroy one of the most functional distributed systems ever created: the modern Web.

This statement may seem very strange, since for many people the Internet has become an integral part of modern life. It is a portal through which we learn the news and find entertainment, keep in touch with family and friends, and gain free access to more information than anyone who lived before us ever had. Today the Web is arguably more useful and accessible to people than ever before. However, people like Sir Tim Berners-Lee, the creator of the World Wide Web, and Vinton Cerf, who is often called one of the «fathers of the Internet», see in Doctorow’s comment the core of an issue whose consequences are unknown and unclear to the majority: the Internet is not developing the way they imagined! Using the Network in a centralized mode harms its performance as a whole, creating a colossal shortage of bandwidth and fragmenting a single network open to all into private corporate segments (private networks on top of the Network) with limited capabilities and a lack of network neutrality, transparency and privacy.

At the same time, it should be noted that, along with its complete disregard for the principles of decentralization on the technological side (in the network architecture), the concept of Web 2.0 raised social activity to a high level, thereby stimulating the formation and development of a culture of social decentralization. Internet users can contribute to content and actively influence it, thereby forming the so-called «editable web» and «collective mind». Unfortunately, when such significant social components take shape on centralized services controlled by someone else, the result is nothing more than an illusion of freedom and decentralization, which at any moment can become an ideal tool for manipulating mass consciousness, far superior in its capabilities to any other medium created in the entire history of mankind.

Sir Tim Berners-Lee

Although industry leaders often stimulate positive change, we must beware of concentration of power, as it can make the Web fragile.

If traffic were limited to text and images, discussions about «decentralization» and «centralization» would probably make no sense to most Internet users and would likely be completely ignored by business. The performance of the Network with such content would most likely fully satisfy the needs of most of its users, and the problems of centralization would lie exclusively in the social sphere and in the legal aspects of protecting user rights, the reliability and confidentiality of information, and the control of information and monopolization of the Network by corporations. But the emergence of heavy content formats (video, audio, games, etc.), along with their growing popularity and a significant increase in end-to-end data transmission for centralized online broadcasting (VoD and live streams), began to rob the Network of a significant part of its potential and performance and to threaten its integrity. The consequences of this already directly concern everyone, and therefore everyone should have an interest in solving these problems!

Sir Tim Berners-Lee

We risk losing everything we have gained from the Internet so far, and all the great achievements that are yet to come. The future of the Internet depends on whether ordinary people will take responsibility for this unusual resource and challenge those who seek to manipulate the Web against the common good.

VIDEO CHANGES INTERNET!¶

A ravenous appetite for digital video, above all VoD (Video on Demand) and live streaming, has changed the Internet a lot!

Several factors played a significant role in the growth of video traffic consumption:

- The emergence of popular OTT content from Hulu, Netflix, Amazon, YouTube, Twitch and others.

- The increasing popularity of live-streamed events such as sports events and concerts.

- The growing popularity and volume of amateur videos and web broadcasts published on the Web.

- Ever-higher video resolutions and formats: HD, 4K, 8K, 360, VR and more.

- Significant growth in the number of connected home screens (TV, STB) and mobile devices.

- Faster Internet connection speeds.

- A growing number of operators delivering first-screen content over IP rather than traditional QAM.

Now!

Today almost no one can imagine the Internet without videos and videos without the Internet!

Video traffic on the Internet, taken across all forms of consumption (VoD, Live Stream, P2P, HTTP Sharing, IP-TV, etc.), accounts for about 90% of all traffic!

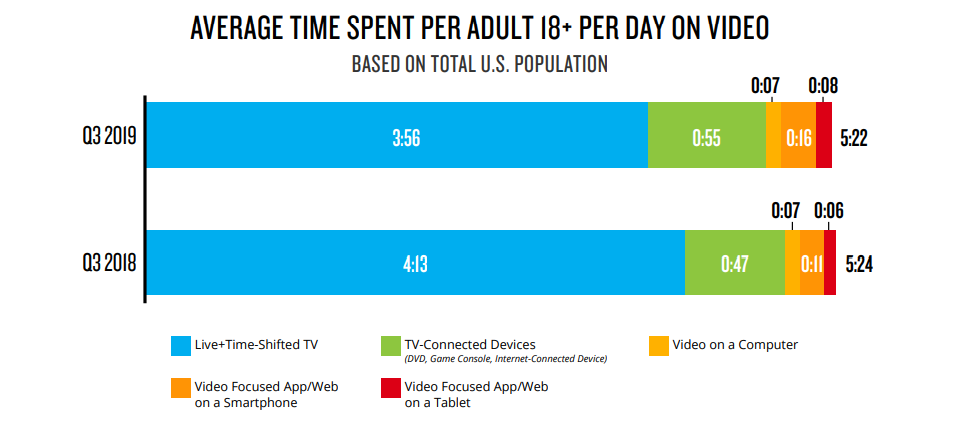

According to Nielsen's estimates, video consumption exceeds 5 hours per person per day.

Moreover, viewers have never before had so many options for connecting to streaming content on their TV. It can be a compatible multimedia device (for example, Apple TV, Google Chromecast, Amazon Fire TV, Roku, etc.), a game console or a smart TV. These devices satisfy consumers' need to access content at the click of a button, with a much greater variety of content and with wider functionality and convenience (Pause, TimeShift, TV Program Archives, SVoD, etc.).

Several billion 4K devices of all kinds have already been sold, and the penetration of 4K TVs worldwide has reached over 50%.

Facebook executives talk about the end of the written word as part of their business plan, and say that the future of the social network lies in video and in increasingly immersive video formats such as VR and 360. As Facebook executive Nicola Mendelsohn said, «Text won't disappear entirely», adding «You have to write for video» and «If I were placing a bet, I would say: video, video, video».

https://qz.com/706461/facebook-is-predicting-the-end-of-the-written-word/

In the end, the Internet is destined to become a modern video platform for global streaming (from amateur video clips to professional content)!

But a question arises: to what extent can the Internet, in its existing centralized mode of operation, meet the real demand for video viewing and the current needs of its users?

Maria Farrell, a former senior executive at ICANN, the organization that manages the Internet's domain name system, speaking about the challenges caused by the centralization of the Internet, points out that the picture is largely hidden from the ordinary person. «The average user thinks that everything is great and that he can watch football», she said. But here comes the «but»...

Centralized Internet broadcasting has severely limited bandwidth and disproportionate costs, and it is significantly inferior in quality and stability of live broadcasts to distribution channels such as cable and satellite networks. This makes it practically uncompetitive (unsuitable for distributing expensive, in-demand live broadcasts of sports events and other linear content).

Thus, bridging the technological gap between the limited capabilities of the Internet infrastructure and the demands placed on its performance is vital to the future growth, success and resilience of the Internet, both for ordinary users and for business.

Challenge to THE ENTIRE INTERNET¶

1. Immense shortage of bandwidth of the Internet Web¶

As of May 5, 2021, international bandwidth stands at 2,000 Tbps, two-thirds of which is used by Google, Facebook, Amazon and Microsoft (according to TeleGeography).

The bandwidth of the three largest international CDN operators that openly publish their data: Cloudflare - 20 Tbps; Fastly Inc - 25 Tbps; Limelight Networks - 35 Tbps.

That sounds like a lot.

However, if somebody decided to hold a global live stream in quality from 720p to 2160p (4K), HDR, at 60 frames per second (see https://support.google.com/youtube/answer/1722171) using the current standard (unicast) technologies, it would be a catastrophe!

Using the entire bandwidth of the international Internet and of the three largest CDN operators, only about 60 million viewers (about 1.3% of all Internet users) could watch such a live broadcast simultaneously.
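The estimate above can be reproduced with a back-of-the-envelope calculation. The sketch below is illustrative only: the capacity figures are the ones quoted in the text, while the per-resolution bitrates (60 fps HDR) and the even mix of resolutions are assumptions chosen for the example, not figures given in this document.

```python
# Back-of-the-envelope estimate: how many unicast viewers fit into a given capacity.
# Capacity figures are the ones quoted in the text. The per-resolution bitrates
# are ASSUMED values (60 fps HDR, in Mbps) and are not stated in this document.

CAPACITY_TBPS = {
    "international bandwidth": 2000,
    "Cloudflare": 20,
    "Fastly": 25,
    "Limelight Networks": 35,
}

ASSUMED_BITRATE_MBPS = {"720p": 9.5, "1080p": 15.0, "1440p": 30.0, "2160p": 75.5}


def max_unicast_viewers(capacity_tbps: float, avg_bitrate_mbps: float) -> int:
    """With unicast every viewer gets a separate stream, so capacity is simply divided."""
    capacity_mbps = capacity_tbps * 1_000_000  # 1 Tbps = 1,000,000 Mbps
    return int(capacity_mbps // avg_bitrate_mbps)


total_tbps = sum(CAPACITY_TBPS.values())
# Assumption: viewers are spread evenly across the four resolutions.
avg_mbps = sum(ASSUMED_BITRATE_MBPS.values()) / len(ASSUMED_BITRATE_MBPS)
viewers = max_unicast_viewers(total_tbps, avg_mbps)

print(f"{total_tbps} Tbps / {avg_mbps} Mbps per viewer = {viewers:,} viewers")
```

Under these assumptions the result is about 64 million simultaneous viewers, the same order of magnitude as the figure quoted above. Different bitrate assumptions shift the number, but not the conclusion.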

Sadly, even the Internet has its limitations!

Take, for instance, the opening ceremony of the Olympic Games, which is watched by over 3 billion people on linear (cable/satellite) TV!

For all these viewers to be able to watch the opening of the Olympics over the Internet in the good quality mentioned above, using standard (unicast) technologies, the bandwidth and capacity of the Internet (including CDN operators) would have to be 50 times greater than they are today!

According to Akamai estimates, the number of viewers who want to watch live sports broadcasts online reaches 500 million at prime time. «With 500 million online viewers, we need 1500 Tbps (to provide a broadcast with a bitrate of 1.5 Mbps, which is 10 times less than what is needed to broadcast in 1080p HD). Today we have 32 Tbps, so you can see the big gap we need to bridge», says Akamai's director of product and marketing, Ian Manford.

As a result, to meet the real demand and needs of Internet users, assuming that 70% of Internet users connect to the Network simultaneously to watch online video in quality from 720p to 2160p (HDR, 60 frames per second), with views distributed equally across these formats, the required Internet bandwidth is over 100,000 Tbps.
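The demand side can be sketched the same way: with unicast, required bandwidth is simply viewers multiplied by bitrate. In the sketch below, the number of Internet users is back-derived from the statement that 60 million viewers are about 1.3% of all users, and the 32.5 Mbps average bitrate is a placeholder for an even mix of 720p to 2160p streams. Both are assumptions made for illustration.

```python
# Rough demand estimate for unicast streaming: bandwidth = viewers x bitrate.
# ASSUMPTIONS (not stated in the text): the number of Internet users is
# back-derived from "60 million viewers = about 1.3% of users", and the
# average bitrate stands in for an even mix of 720p to 2160p streams.

EXISTING_CAPACITY_TBPS = 2000 + 20 + 25 + 35  # figures quoted in the text
ASSUMED_INTERNET_USERS = 60_000_000 / 0.013   # roughly 4.6 billion
ASSUMED_AVG_BITRATE_MBPS = 32.5


def required_tbps(viewers: float, bitrate_mbps: float) -> float:
    """Total bandwidth needed when every viewer receives a separate stream."""
    return viewers * bitrate_mbps / 1_000_000  # Mbps -> Tbps


olympics_tbps = required_tbps(3_000_000_000, ASSUMED_AVG_BITRATE_MBPS)
peak_tbps = required_tbps(0.70 * ASSUMED_INTERNET_USERS, ASSUMED_AVG_BITRATE_MBPS)

print(f"Olympics opening, 3 billion viewers: {olympics_tbps:,.0f} Tbps "
      f"({olympics_tbps / EXISTING_CAPACITY_TBPS:.0f}x existing capacity)")
print(f"70% of Internet users at once:       {peak_tbps:,.0f} Tbps "
      f"({peak_tbps / EXISTING_CAPACITY_TBPS:.0f}x existing capacity)")
```

With these inputs both scenarios come out at roughly 50 times the existing capacity, and the second one exceeds 100,000 Tbps, which is consistent with the figures in the text.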

Summary:

The shortage of Internet bandwidth is over 100,000 Tbps.

Existing network bandwidth needs to increase more than 50-fold to meet the current needs of Internet users for online viewing!

2. The Internet does not provide any guarantees of reliability and performance when operating in a centralized data delivery mode.¶

Because it was designed as an open, equal and decentralized platform, the Internet does not provide any guarantees of reliability or performance when operating in a centralized data delivery mode. On the contrary, end-to-end communications across the wide-area Internet are subject to a number of bottlenecks that harm performance, including latency, packet loss, network outages, protocols that are inefficient for this purpose, and problems between networks.

Now for more details:

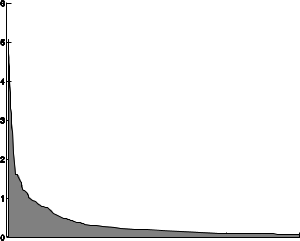

Although the Internet is thought of as a single whole, it actually consists of thousands of different networks, each of which provides access to a small percentage of end users. Even the largest network covers no more than 5% of Internet traffic (see the image above).

In fact, over 650 networks are required to reach 90% coverage. This means that content hosted on a central server must travel through multiple networks before it finally reaches end users.

In practice, data exchange between networks is neither efficient nor reliable, as it can be harmed by a number of factors. The most significant are:

Peering point congestion.

Capacity at peering points, where networks exchange traffic, typically lags well behind demand, largely because of the economic structure of the Internet. Money flows in at the “first mile” (website hosting) and the “last mile” (end users), which stimulates investment in first-mile and last-mile infrastructure. There is, however, little economic incentive to invest in the “middle mile”: these are high-cost, zero-revenue peering points where networks are forced to cooperate with competing organizations. As a result, these exchange points become bottlenecks that cause packet loss and increase latency.

Inefficient routing protocols.

The routing protocol BGP (Border Gateway Protocol). Although it has coped well with the scaling of the Internet, BGP has a number of limitations. It was not designed with performance in mind. For example, some routes between locations in Asia actually pass through peering points in the US, which increases latency significantly. In addition, when routes fail or connectivity degrades, BGP can be slow to converge on new routes. BGP bases its routing decisions on information provided by its neighbors, which in turn gather information from their own neighbors, and so on, without knowing anything about the topology, latency, or real-time congestion of the underlying networks. This works well as long as the information in BGP messages, called “advertisements”, is accurate. Any false information can spread almost instantly across the Internet, because there is no way to verify the honesty, or even the identity, of those who send such messages. Whether through ordinary human error or deliberate action by intruders, misconfigured or hijacked routes can quickly propagate across the Internet, causing route flapping, bloated paths, and even widespread loss of connectivity.

Examples of hijacking: in 2008 the government of Pakistan decided to block YouTube within the country because of a video about the prophet Muhammad. Erroneous BGP announcements resulted in the traffic of most YouTube users being redirected to Pakistan, leaving the site unavailable for about two hours. In 2010, because of an incorrect announcement sent by the Chinese operator China Telecom, traffic of US military departments passed through China for around 18 minutes, and nothing would have prevented it from being intercepted. Finally, in 2014 intruders redirected the traffic of 19 providers to Canada in order to steal bitcoins. These are just a few cases; most of the time, no one pays attention to errors in the operation of BGP.

At the same time, the role of BGP should not be underestimated merely because it fits poorly into the picture of a centralized Internet. Thanks to this protocol, the Internet remains a decentralized global network and continues to grow!

Unreliable networks.

The Internet is experiencing disruptions all the time caused by a variety of reasons: disconnected cables, misconfigured routers, DDoS attacks, power outages, even earthquakes and other natural disasters. While disruptions vary in scale, large-scale events are not uncommon. In some cases troubleshooting can take several days.

Inefficient network protocols.

While TCP is designed for reliability and congestion avoidance, it carries significant overhead and can perform suboptimally on the high-latency or lossy links that are common on the wide-area Internet. Mid-mile congestion aggravates the problem, as packet loss triggers TCP retransmissions, further slowing down communication.

TCP is a major bottleneck for video and other large files. Since an acknowledgment is required for the data sent, throughput (when using standard TCP) depends on both network latency (round-trip time, RTT) and packet loss. Thus, the distance between the server and the end user can become a major bottleneck for download speed and video viewing quality. The table below illustrates the dramatic results of this effect. For example, online streaming in HD is not possible if the server is not nearby.

| Distance (Server to User) | Network RTT | Typical Packet Loss | Throughput | 4GB DVD Download Time |
|---|---|---|---|---|
| Local: <100 mi. | 1.6 ms | 0.6% | 44 Mbps (high quality HDTV) | 12 min. |
| Regional: 500–1,000 mi. | 16 ms | 0.7% | 4 Mbps (basic HDTV) | 2.2 hrs. |
| Cross-continent: ~3,000 mi. | 48 ms | 1.0% | 1 Mbps (SD TV) | 8.2 hrs. |
| Multi-continent: ~6,000 mi. | 96 ms | 1.4% | 0.4 Mbps (poor) | 20 hrs. |
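The trend in the table can be illustrated with the well-known Mathis approximation for steady-state TCP throughput, roughly (MSS / RTT) * (C / sqrt(p)), where p is the packet loss rate. This is only a sketch: the text does not say which model produced the table, and the segment size (1460 bytes) and the constant C are assumptions, so the absolute numbers will not match the table.

```python
import math

# Mathis et al. approximation for steady-state TCP throughput:
#     throughput <= (MSS / RTT) * (C / sqrt(p))
# ASSUMPTIONS: MSS = 1460 bytes and C = sqrt(3/2). The model behind the table
# is not given in the text, so absolute values differ from the table.

MSS_BITS = 1460 * 8
C = math.sqrt(3 / 2)

ROWS = [  # (label, RTT in ms, packet loss as a fraction), taken from the table
    ("Local", 1.6, 0.006),
    ("Regional", 16.0, 0.007),
    ("Cross-continent", 48.0, 0.010),
    ("Multi-continent", 96.0, 0.014),
]


def tcp_throughput_mbps(rtt_ms: float, loss: float) -> float:
    """Upper bound on the throughput of a single TCP connection, in Mbps."""
    rtt_s = rtt_ms / 1000.0
    return (MSS_BITS / rtt_s) * (C / math.sqrt(loss)) / 1_000_000


def download_hours(size_gb: float, throughput_mbps: float) -> float:
    """Time to transfer size_gb (decimal gigabytes) at the given throughput."""
    return size_gb * 8_000 / throughput_mbps / 3600  # 1 GB = 8,000 megabits


estimates = [(label, tcp_throughput_mbps(rtt, loss)) for label, rtt, loss in ROWS]
for label, mbps in estimates:
    print(f"{label:16s} {mbps:7.2f} Mbps   4 GB in {download_hours(4, mbps):6.2f} h")
```

The estimates come out higher than the figures in the table, but they fall off with distance in the same way: throughput drops about tenfold from a local to a regional server and by roughly two orders of magnitude across continents. `download_hours(4, 44)` gives about 0.2 hours, matching the 12 minutes in the first row.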

Economic scalability.

Economic scalability means having enough resources to meet demand at any moment, both during planned events and during unexpected periods of peak traffic. Scaling up a centralized infrastructure is costly and time-consuming, and capacity needs are difficult to predict in advance. A lack of resources on the broadcaster's side leads to disappointed consumers and a potential loss of business, while a large excess means wasting money on unused infrastructure.

At the same time, it should be well understood that scalability means not only sufficient performance and bandwidth on the origin servers, but also sufficient bandwidth throughout the network, all the way to every end user/consumer. This is a very serious problem! Fixing it is now the most urgent task, and a solution is being actively pursued by every company that wants to use the Internet as a professional content distribution system.

3. Large expenses for the implementation of online broadcasts¶

For OTT services and high-quality broadcasts (VoD and live streams) to operate reasonably well, you need to build your own CDN infrastructure or use a third-party one.

In a CDN, the original media content is copied to hundreds or thousands of servers installed around the world. So when you connect from Amsterdam, instead of reaching the broadcaster's main server in the United States, you download the same copy from a server in Amsterdam or from whichever server is closest to Amsterdam. This avoids loading international and interregional networks and, by reducing the distance between server and client, makes the connection more stable and significantly speeds up data transfer (increases the download speed for end users).

It is thanks to CDNs that broadcasts from services with huge numbers of users, such as YouTube, Twitch, Facebook, etc., can now be watched by users in different countries of the world in good quality and with minimal delays.
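The idea of serving each client from the closest copy can be sketched as a toy model. The server names and coordinates below are hypothetical, and great-circle distance is only a stand-in: real CDNs also weigh server load, network topology and measured latency when choosing a server.

```python
import math

# Toy model of CDN server selection: serve each client from the geographically
# nearest server. Server names and coordinates are HYPOTHETICAL examples.

SERVERS = {
    "origin-us": (40.71, -74.01),      # broadcaster's main server (New York)
    "edge-amsterdam": (52.37, 4.90),
    "edge-singapore": (1.35, 103.82),
}


def haversine_km(a, b):
    """Great-circle distance between two (latitude, longitude) points in km."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def nearest_server(client):
    """Name of the server with the smallest distance to the client."""
    return min(SERVERS, key=lambda name: haversine_km(client, SERVERS[name]))


rotterdam = (51.92, 4.48)
print(nearest_server(rotterdam))
print(f"{haversine_km(rotterdam, SERVERS['origin-us']):.0f} km to the origin server")
```

A client in Rotterdam is matched to the Amsterdam server, a few dozen kilometres away, instead of the origin server several thousand kilometres away.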

Building your own high-quality CDN infrastructure, comparable in scale and bandwidth to, for example, Akamai, will cost several billion dollars plus millions in monthly maintenance, and it will take a long time to deploy. All of this just to be able to deliver one global webcast in HD quality (10 Mbps) to 10 million concurrent viewers spread across different parts of the world (with no predefined geography). There is also the pressing issue of economic scalability (capacity planning, flexibility, quality). In other words, over-provisioning must not lead to unnecessary costs, and under-provisioning must not lead to low quality and service interruptions, yet it is difficult to predict whether traffic for a given event will be 2 or 10 times higher than normal. As a result, creating your own CDN makes sense only for the largest international OTT operators (for example, YouTube or Netflix). It is entirely unsuitable for international services without such a large audience, and equally unsuitable for organisers who broadcast infrequent large-scale events such as popular sports events, concerts, natural or man-made disasters, or important local or world events.

One interesting fact: Netflix, with about 100 million users in 190 countries, originally built its own infrastructure on a huge server fleet. Netflix engineers wrote hundreds of programs and deployed them on their servers, running over 700 microservices that manage the many parts of Netflix's infrastructure, yet all of this turned out not to be enough to get the required performance. To increase performance, they decided to bring in the infrastructure of Amazon Web Services (AWS), and later, in addition to AWS, the networks of several other commercial CDN operators, including giants such as Akamai, Level 3 and Limelight Networks. But even this was not enough; more on that later (in the section on the "last mile" problem).

When using third-party CDN operators such as Akamai, you depend on their spare capacity (which has to be reserved in advance for large-scale events) and on the cost of their services. For example, at an average CDN price of $ 0.05 per 1 GB, 1 hour of broadcasting to 1 million concurrent viewers in HD (at a bitrate of 10 Mbps) will cost the broadcaster $ 225,000. At the same time, the problem of the limited bandwidth of existing standard CDN operators does not go away.
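The arithmetic behind these figures can be checked with a short sketch. It assumes decimal units (1 GB = 8,000 megabits) and a flat per-gigabyte price; the function names are illustrative and do not come from any CDN's pricing API.

```python
def cdn_cost_usd(viewers, bitrate_mbps, hours, price_per_gb):
    """Estimate CDN delivery cost, assuming decimal units (1 GB = 8000 megabits)."""
    gb_per_viewer = bitrate_mbps * 3600 * hours / 8000
    return viewers * gb_per_viewer * price_per_gb

def aggregate_tbps(viewers, bitrate_mbps):
    """Total outgoing bandwidth in terabits per second for unicast delivery."""
    return viewers * bitrate_mbps / 1_000_000

if __name__ == "__main__":
    # 1 million viewers, 10 Mbps, 1 hour, $0.05 per GB
    print(round(cdn_cost_usd(1_000_000, 10, 1, 0.05)))
    # 10 million viewers at 10 Mbps
    print(aggregate_tbps(10_000_000, 10))
```

Each viewer at 10 Mbps consumes 4.5 GB per hour, so 1 million viewers come to about $ 225,000 per hour at the quoted price. Under the same assumptions, 10 million concurrent HD viewers require 100 Tbps of outgoing bandwidth.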

4. The "last mile" problem: without fixing it, high-quality broadcasting is impossible¶

The «last mile» is the part of the network that physically reaches the end user (the channel connecting the end (client) device to the ISP's network).

In a structure where content is delivered exclusively through a centralized CDN and there is no physical presence at the "last mile" (no direct access to the ISP network to which the viewer is connected), there is no way to guarantee a stable data transfer rate that matches the speed of the client's connection to their Internet Service Provider (ISP). For example, a 100 Mbit/s port does not mean that every user will actually get that speed, since the free bandwidth of the trunk channel at the moment of data transfer may be only 10 Mbit/s. Therefore, to deflect customer complaints, the broadcaster has to make excuses to its clients/viewers, telling them that playback would be fine if their ISP were directly connected to the CDN network serving the broadcaster.

For example, to deflect complaints from clients who have a 4K TV and a 100 Mbit/s Internet connection and cannot understand why they have to watch videos in low quality at a bit rate of 2 Mbit/s instead of the advertised 4K, Netflix maintains its own index of Internet provider speeds on a dedicated page (ispspeedindex.netflix.com) and regularly publishes the speed indices on its blog, so that consumers can see what speeds their providers deliver for Netflix streams.

There are many reasons why CDN servers are located in large, centralized regional data centers rather than in very small points of presence (PoP). For the large content libraries used in VoD delivery, this is good enough. Still, to maintain high quality and stability for linear content (live streaming) or popular VoD episodes, content needs to be delivered from CDN resources located in small PoPs as deep in the network as possible, so as to be «closer to the viewer». Usually, operators must identify suppliers that can help them ensure consistent high quality, as well as increase granularity and flexibility.
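The bottleneck effect described here can be sketched in a few lines: the end-to-end rate is bounded by the hop with the least free capacity, and the player can only choose a rendition that fits under it. The hop values and the bitrate ladder below are hypothetical illustrations, not measurements.

```python
def end_to_end_mbps(free_capacity_per_hop_mbps):
    """The achievable rate is limited by the hop with the least free capacity."""
    return min(free_capacity_per_hop_mbps)

def best_rendition(throughput_mbps, ladder_mbps):
    """Pick the highest bitrate that fits; fall back to the lowest one otherwise."""
    fitting = [b for b in ladder_mbps if b <= throughput_mbps]
    return max(fitting) if fitting else min(ladder_mbps)

if __name__ == "__main__":
    # Hypothetical path: 100 Mbit/s access port, 10 Mbit/s free on the trunk, 1000 Mbit/s at the CDN.
    path = [100, 10, 1000]
    ladder = [2, 5, 10, 25]  # hypothetical renditions in Mbit/s; 25 stands in for 4K
    rate = end_to_end_mbps(path)
    print(rate, best_rendition(rate, ladder))
```

Under these assumptions, the viewer with a 100 Mbit/s port is limited to the 10 Mbit/s rendition, and the 4K rendition is out of reach regardless of the speed of the access link.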

Joe McNamee, executive director of the European advocacy group EDRi, noted that even if video service owners build their own parallel infrastructure (CDN), their vertical integration efforts cannot extend to control of the "last mile". And if there is no "last mile" control, there is no guarantee of stream stability for end users of the Internet (Viewers).

However, in terms of cost, software and operational complexity, it can be difficult to deploy and manage a large number of widely distributed servers. Broadcasters and their CDNs need to find a way to place their dedicated or virtual servers "closer to the viewer", and that point is already inside the infrastructure of the ISP (the Internet Service Provider the Viewer uses). This means negotiating terms individually with each ISP and facing additional expenses.

For example, to solve this task Netflix implemented a project called Open Connect, whose aim is to create one more CDN layer integrated directly into the infrastructure of Internet providers (ISPs): https://openconnect.netflix.com

It is also very important to understand that if a broadcaster has a large audience, and its traffic makes up a significant share of the total network traffic of an ISP, there is a risk that the ISP will throttle this traffic, which will significantly affect the quality of broadcasts. In some cases, the ISP may even block access to the broadcaster's service completely and demand some kind of compensation from the broadcaster, which ultimately leads to additional costs that were not initially planned.

To those who think that Internet providers should not care about this, and should not even be interested in how much traffic subscribers transmit or which subscribers transmit it, this argument may seem insignificant. In reality it is a very meaningful one: subscribers are generally not given guaranteed dedicated Internet channels, and it is precisely by redistributing traffic that Internet providers run their business and subscribers get inexpensive tariff plans.

The conflict between Netflix and the ISP Verizon is an illustrative example of how real and relevant this problem is. The essence of the conflict was that Verizon blocked Netflix services for its customers, claiming that Netflix abused its position as a heavyweight sender of Internet traffic (accounting for 30% of peak traffic at the time), and demanded compensation from Netflix for providing such bandwidth.

In fact, for a broadcaster to achieve a good level of performance in a centralized architecture, it practically has to build its own private, separate Internet network. And this is one of the possible solutions. But who can afford such a solution, and what will it lead to?!

If ISP services start to be provided en masse directly by companies that own their own VoD and live-streaming services (for example, Google, Facebook, Amazon, Apple, etc.), and this is what they are striving for (seeing it as one of the optimal solutions to the "last mile" problem and as a way to fully control how users surf the Web), there will also be a conflict of business interests. It is probably unnecessary to explain which traffic will be given preference in such a situation. In that case we can forget about any net neutrality, and the only one able to provide strong guarantees of broadcast quality and stability will be the one who owns the ISP!

Do you want to one day have to choose between Netflix, YouTube, Apple TV and so on, knowing that by choosing a particular ISP you will be able to use only one of these services properly?! Do you need Internet from Google in order to have no problems with its services, or Internet from Facebook in order to use its services properly, and do you need such an Internet at all, where you have to make such a choice?!

Joe McNamee

What will happen when you have a large CDN infrastructure and access to the last mile? You will find yourself in another world.

This situation demonstrates how serious and big the problems can be for broadcasters without access to the last mile, and how gloomy it can be when someone has monopoly power over the last mile.

5. Low stream stability¶

Placing servers in close proximity to end users significantly increases the stability and outgoing bandwidth of the entire system, but this does not completely solve the problem of achieving a level of quality and stability that is not inferior to such distribution channels as satellite and cable TV.

The thing is that with centralized technology (Unicast) the Viewer gets the stream from one single server. Combined with the problems and limitations mentioned above, this can significantly affect the stability of a broadcast if anything fails on the server or along the route.

Standard Unicast technologies do not make it possible to implement complex scenarios for processing data and redistributing video streams in a way that avoids added latency in live streaming or a drop in quality when network nodes or routes have problems. Accordingly, with such a technology there can be no guarantees of stream stability.

Simply put, in order to compete with distribution channels such as satellite and cable TV, the only reason for a stream to stop should be the lack or loss of the Internet connection!

Appropriate guarantees of stream stability can be achieved only with a technology that loads data in several streams, simultaneously from several servers and along different routes, which fully compensates for possible problems on some of the servers or routes. But such an implementation will no longer be Unicast, but P2P, and that's a completely different story.
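The idea of loading the same data from several servers at once can be sketched as follows. This is an illustration only, not the Ace Stream implementation: the sources are hypothetical callables that stand in for servers or routes, and the first successful response wins while failures are skipped.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def fetch_redundant(segment_id, sources):
    """Request one segment from every source in parallel; return the first success."""
    with ThreadPoolExecutor(max_workers=len(sources)) as pool:
        futures = [pool.submit(src, segment_id) for src in sources]
        for fut in as_completed(futures):
            try:
                return fut.result()
            except Exception:
                continue  # this server or route failed; wait for another one
    raise RuntimeError(f"segment {segment_id} unavailable from all sources")

# Hypothetical sources used only for the demonstration below.
def healthy(segment_id):
    return f"segment-{segment_id}".encode()

def broken(segment_id):
    raise ConnectionError("route down")

if __name__ == "__main__":
    # Playback continues even though one of the two sources fails.
    print(fetch_redundant(3, [broken, healthy]))
```

With a single source, one failure stops the stream. With several independent sources, the stream stops only when all of them fail at the same time.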

6. Huge environmental damage from a centralized streaming industry¶

About 90% of Internet resources and data centers work for the streaming industry, yet cover only about 1% of users' real demand for online viewing. Accordingly, to cover the bandwidth deficit using standard methods, the capacity of the centralized infrastructure would have to be increased hundreds of times. At the same time, data centers already use more than 2% of the world's electricity and produce a corresponding share of carbon emissions (over 650 mln t/h. CO2), which is already five times higher than the CO2 emissions from Bitcoin mining!

According to experts, within a decade data centers and digital infrastructure, even with the most energy-efficient technologies, will account for up to 20% of global electricity consumption and 5.5% of CO2 emissions.

How are things going IN PRACTICE??¶

In practice, as usual, things are more complex and less predictable than in theory!

To better understand how the network problems identified above really affect broadcasts, how well or badly the existing standard (unicast) solutions work, and how significant this can be, especially for live broadcasts of sports events, here are some historical examples:

Amazon's first live broadcast of a sports match, a very significant event for the company, ran into serious problems (the Chicago Bears vs Green Bay Packers match).

After Amazon, the next match was broadcast by Yahoo, and there were problems too (the NFL match between the Baltimore Ravens and the Jacksonville Jaguars).

And almost all companies, including Apple, face problems with large-scale live broadcasts (many still remember the failed broadcast of the presentation of the new iPhone 6 Plus, which practically opened a new era of smartphones).

Perhaps the most valid and undeniable indicator of the existing problems is the outcome of one of the most significant sports events in the world, with a huge number of people willing to watch it live, for which the exclusive broadcaster in the United States charged $ 100. Surely few people would accept problems with a broadcast they paid $ 100 for?!

On August 27, 2017, Las Vegas hosted a duel called the most anticipated fight of the century and the most iconic event in modern boxing - 12-time world boxing champion Floyd Mayweather Jr. faced mixed martial arts fighter Conor McGregor in the ring. The live broadcast was carried out using the pay-per-view system with a price per view in normal quality - $ 89.95, in HD - $ 99.95.

Result: a large number of disappointed people!

After all, live broadcasts are not the distribution of static video files (they are not movies)!

And if such problems with live broadcasts are observed among the largest Internet giants, which have multi-billion dollar CDN infrastructures, and even with a clearly predictable number of concurrent viewers and an incredibly high price for access to the broadcast, then the question arises: is it possible at all to provide a high-quality, stable broadcast over the Internet, let alone for an unlimited (unpredictable) number of viewers?

Before getting an answer to this question, it is important to note that in fact the "problem" is not in the Internet itself, but in how it is used. All of the above problems are irrelevant for peer-to-peer (P2P) networks with a decentralized data storage and delivery system, which clearly indicates how unnatural centralization is for the Internet and how difficult it is to achieve acceptable levels of performance, reliability and economic scalability with this mode of operation.

That's the reality!

Now you know exactly what the limitations are and how far the existing Internet bandwidth can meet the real demand for online viewing when standard (Unicast) broadcasting technologies are used!

But there is a solution!¶

All that is needed is to finally start using the Network exactly as it was originally intended to be used when it was created, taking into account its architecture, with its emphasis on peer-to-peer (P2P) operation and maximum decentralization.

Briefly about P2P tech¶

A peer-to-peer network, or P2P network for short, is a kind of computer network that uses a distributed architecture. This means that all the computers or devices that belong to it provide and use resources together. The computers or devices that are part of a peer-to-peer system are called peers. Every node (peer) in a peer-to-peer system is equal to the other peers. There are no privileged users, as there is no centralized administrative device. Thus, and only under such conditions, the network is considered decentralized.

In a way, peer-to-peer networks are the socialist networks of the digital world. Every network user is equal to the others and has the same rights and responsibilities. Every device (node/peer) connected to a P2P network acts both as a client and as a server, thereby not only consuming the resources of the Network (of other participants), but also providing the Network (other participants) with its own resources (hardware and network). Every resource available on the peer-to-peer network is shared by all nodes without the involvement of a central server. Shared resources on a P2P network can be:

- Processor power

- Disk space

- Network bandwidth

How P2P Network Works¶

The main purpose of peer-to-peer networks is to let computers and devices share resources and work together to provide a specific service or perform a specific task. As mentioned earlier, a decentralized network is used to share all kinds of computing resources, such as computing power, network bandwidth, or disk space.

The most common use case for peer-to-peer networks is Internet file sharing. Peer-to-peer networks are perfect for Internet file sharing (distributed file storage and transfer) because they allow the computers connected to them to receive and send files simultaneously using multiple sources.

Consider a situation: when you visit a video hosting site (for example, YouTube), you download a video file (yes, in case you did not know, whenever you watch an online video you are downloading a video file to your device). In this case, the video hosting service acts as a server, and your device acts as a client receiving the video file from that server. You can compare this to one-way traffic on a single-lane road: the file is a cargo intended for you, carried by one single car that goes from point A (the video hosting) to point B (your device). On a single-lane road, even the most minor accident or traffic jam significantly delays the cargo. In the event of a serious accident or traffic jam, the cargo is re-sent along a different route, where the same problems may occur. In the end this can lead to a significant decline in the quality of the service and to damage to the delivered cargo (stops for buffering, a decrease in the visual quality of the video, or no playback at all).

If you download the same file over a peer-to-peer network using a P2P protocol for data transfer, the download works differently. The file is downloaded to your device in parts, simultaneously from many other devices that already have the parts you need, and the downloaded parts (usually 16 KB in size, for example, in the BitTorrent protocol) are combined into the original file. At the same time, parts of this file are also sent (uploaded) from your device to other network participants who request them. This is similar to a two-way road with an unlimited number of lanes, with data (parts of the file) delivered simultaneously along different routes: the file (cargo) you need is duplicated many times, divided into parts, loaded onto numerous cars and sent to you simultaneously along multiple routes, on roads with an unlimited number of lanes. In turn, you also become an active participant in this logistics flow and, in the opposite lane, send the cargo you already hold (copies of the parts of the file you have received) at the request of other participants (users) of the Network.
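The piece-by-piece download described above can be sketched in a simplified form. The assumptions here are illustrative: peers are modelled as in-memory dictionaries mapping piece index to bytes, pieces are verified with SHA-1 hashes known in advance, and the peers are queried one after another rather than truly in parallel. This is not the BitTorrent wire protocol or the Ace Stream protocol.

```python
import hashlib

PIECE_SIZE = 16 * 1024  # 16 KB, the part size quoted in the text

def split(data, size=PIECE_SIZE):
    """Cut a file into fixed-size parts (the last part may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]

def download(piece_hashes, peers):
    """Collect each part from any peer holding a valid copy, then reassemble the file.

    peers: list of dicts mapping piece index -> bytes (hypothetical in-memory peers).
    """
    pieces = {}
    for index, expected in enumerate(piece_hashes):
        for peer in peers:
            piece = peer.get(index)
            if piece is not None and hashlib.sha1(piece).hexdigest() == expected:
                pieces[index] = piece
                break  # a valid copy was found, move on to the next part
        else:
            raise LookupError(f"no peer has a valid copy of piece {index}")
    return b"".join(pieces[i] for i in range(len(piece_hashes)))

if __name__ == "__main__":
    original = bytes(range(256)) * 200
    parts = split(original)
    hashes = [hashlib.sha1(p).hexdigest() for p in parts]
    # No single peer holds the whole file, and one copy is corrupted.
    peer_a = {0: parts[0], 2: parts[2]}
    peer_b = {0: b"corrupt", 1: parts[1], 3: parts[3]}
    print(download(hashes, [peer_b, peer_a]) == original)
```

No single peer needs to hold the whole file, and a damaged or missing part on one peer is simply fetched from another, which is the property the road analogy describes.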

The reference peer-to-peer (P2P) network is unlimited in scalability, without shortage of resources and bandwidth, and does not harm the environment!

The presence of an effective P2P protocol and a reliable integrated solution, with the possibility of its smooth implementation and use in the data delivery system, on top of the existing Internet infrastructure, is the only solution to all the identified problems related to standard Unicast technologies!